To no one’s surprise, Google has killed yet another popular service. To the dismay of many, that service was Google Domains; see https://9to5google.com/2023/06/15/google-domains-squarespace/. Google Domains was popular because it was inexpensive, simple to manage, no-frills, and no-BS. It did not charge extra for private WHOIS, which kept us from getting offers to submit our domains to over 300 search engines, or fake “renewal” scams. That made it great for all those projects “I’ll get to one day”.

Neither Google nor Squarespace sent any communication; everyone found out from news stories. The news broke on June 15, and I had registered domains on June 12 with no indication of a sale. Since Squarespace’s prices are almost 2x what Google Domains charges, and its business model is selling website builders, a lot of people are now looking for a good alternative. Cloudflare comes up often because of its pricing and the trust it has earned in the technical community.

Since a few of my domains are actually live and pointing to things people use, switching registrars makes me a little nervous. It’s just DNS; nothing ever goes wrong because of DNS… Fortunately, I have a few domains for projects I’ll get to one day, so I can test with those.

I recommend doing this with two browser windows open, one for Cloudflare and one for Google Domains, since there is a little back-and-forth.

Step 0: Prechecks! Can you transfer your domain? Do you have a Cloudflare account?

First, note that you can’t transfer a domain within 60 days of registration (this is an ICANN rule). Your registration also needs to be more than 15 days from expiration, so start the process before then, or you’ll need to renew first and then transfer. Cloudflare also doesn’t support every top-level domain that Google does, notably .dev (although they are working on supporting .dev, and it should be ready by the end of the summer). I did not check .zip.
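If you have a pile of domains to sort through, the two date rules above are easy to check in bulk. Here’s a minimal Python sketch; the function and constant names are my own, and the boundary handling (day 60 after registration counts as eligible, exactly 15 days before expiry does not) is an assumption, so confirm edge cases with the registrar before relying on it.

```python
from __future__ import annotations

from datetime import date

# Rules as described in this post (hypothetical constant names).
MIN_DAYS_SINCE_REGISTRATION = 60  # ICANN: no transfers in the first 60 days
MIN_DAYS_BEFORE_EXPIRATION = 15   # must be MORE than 15 days from expiry


def transfer_blockers(registered: date, expires: date, today: date) -> list[str]:
    """Return reasons a transfer would be refused; an empty list means none found."""
    blockers = []
    age = (today - registered).days
    if age < MIN_DAYS_SINCE_REGISTRATION:
        wait = MIN_DAYS_SINCE_REGISTRATION - age
        blockers.append(f"registered {age} days ago; wait {wait} more days")
    remaining = (expires - today).days
    if remaining <= MIN_DAYS_BEFORE_EXPIRATION:
        blockers.append(f"expires in {remaining} days; renew first, then transfer")
    return blockers


if __name__ == "__main__":
    # A domain registered on June 12, checked on July 1
    print(transfer_blockers(date(2023, 6, 12), date(2024, 6, 12), date(2023, 7, 1)))
    # -> ['registered 19 days ago; wait 41 more days']
```

Returning a list of reasons, rather than a bare True/False, tells you whether to wait or to renew first.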

You’ll need a Cloudflare account. If you don’t have one, create an account and make sure you complete the email verification. You can’t transfer a domain to Cloudflare until you have verified your email. This took me less than 5 minutes overall.

As part of the process, you need to change your nameservers to Cloudflare’s. This involves DNS propagation and may take up to 24 hours. I have a couple of side projects on shared hosting that use the host’s nameservers, so this is the part that worries me the most. If you’re using a shared host’s nameservers, check their documentation before switching anything, to make sure you don’t also need some extra configuration in the host’s setup.

Cloudflare’s documentation for transferring is at https://developers.cloudflare.com/registrar/get-started/transfer-domain-to-cloudflare/.

Several of my domains are used only to forward traffic to a far less glamorous URL, usually a registration site for an event, which I have to change 3-4 times per year. Cloudflare does support URL forwarding, though not as elegantly as Google Domains. You can set it up after Step 2 below. Cloudflare’s documentation is at https://developers.cloudflare.com/support/page-rules/configuring-url-forwarding-or-redirects-with-page-rules/. That said, I’d schedule the transfer between events, when the forwarding URL isn’t being used.

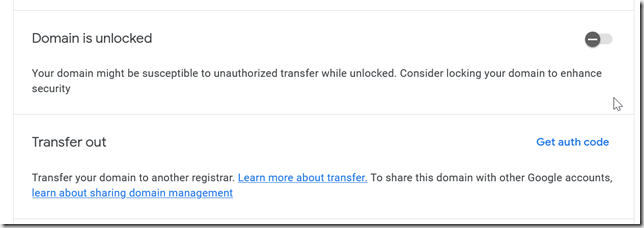

Step 1: Unlocking Domains in Google Domains

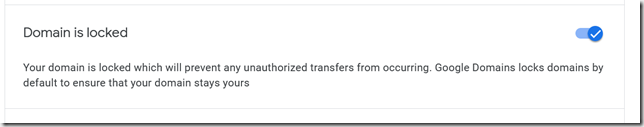

Log into Google Domains, select the domain you want to transfer, click Registration Settings, then scroll down to the Domain Registration section. By default, Google locks domains against transfer, so you need to unlock yours here. This is also where you’ll find the transfer code you need in a later step.

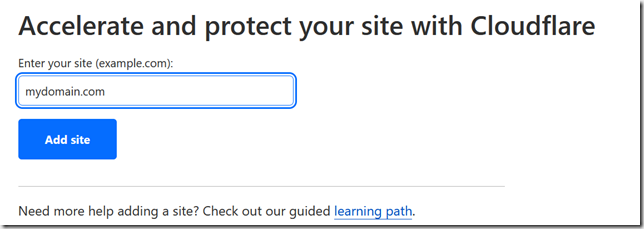

Step 2: Transfer DNS to Cloudflare

Before you transfer the domain registration, you first transfer DNS to Cloudflare. Log into your Cloudflare account, and on the Websites page, click one of the “Add site” buttons to start the setup process.

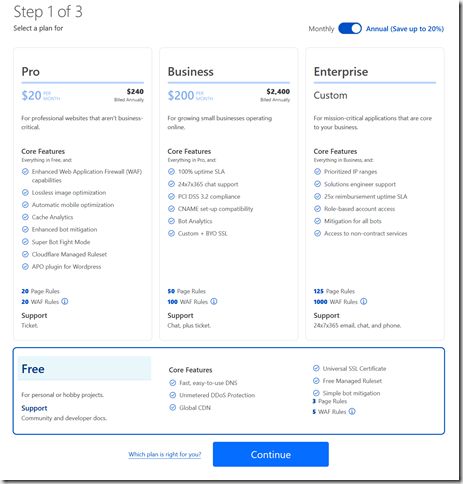

The first step is to choose the plan you want to use. I love places that have free plans for all my hobby projects, so that’s what I’m starting with.

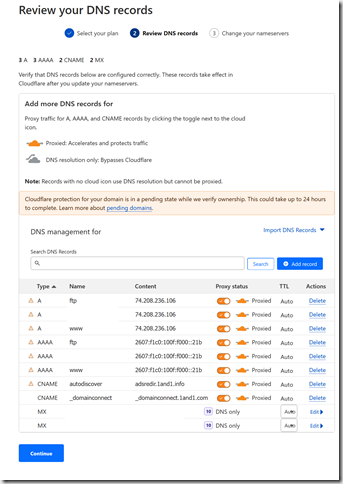

IMPORTANT!! Cloudflare then scans your domain’s DNS records and gives you an opportunity to confirm them. Compare the imported records against your current configuration before continuing. You can also add records here, so this is a good time to add a DKIM record, since Gmail is starting to check those (see https://support.google.com/a/answer/174124?hl=en).
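If a domain has more than a handful of records, eyeballing two screens gets error-prone. Below is a minimal sketch of the comparison, assuming you’ve copied each record by hand (or from an export) into a simple type/name/value form; the `Record` type and the normalization rules are my own simplifications, not Cloudflare’s import logic. It treats names and hostname-valued records (CNAME, MX, NS) case-insensitively and ignores trailing dots, but compares TXT values exactly.

```python
from __future__ import annotations

from typing import Iterable, NamedTuple


class Record(NamedTuple):
    rtype: str  # "A", "CNAME", "MX", "TXT", ...
    name: str   # owner name, e.g. "www.example.com"
    value: str  # record data as shown in the dashboard


# Record types whose value is itself a hostname (compared case-insensitively)
HOSTNAME_VALUES = {"CNAME", "MX", "NS"}


def normalize(r: Record) -> Record:
    """Drop trailing dots and case differences that don't change meaning."""
    rtype = r.rtype.upper()
    name = r.name.strip().rstrip(".").lower()
    value = r.value.strip()
    if rtype in HOSTNAME_VALUES:
        value = value.rstrip(".").lower()
    return Record(rtype, name, value)


def diff_records(current: Iterable[Record], imported: Iterable[Record]) -> dict[str, list[Record]]:
    """Compare your current records with the ones Cloudflare imported."""
    cur = {normalize(r) for r in current}
    imp = {normalize(r) for r in imported}
    return {
        "missing": sorted(cur - imp),     # in your config, not imported
        "unexpected": sorted(imp - cur),  # imported, not in your config
    }


if __name__ == "__main__":
    current = [
        Record("A", "example.com", "192.0.2.10"),
        Record("CNAME", "www.example.com", "example.com."),
        Record("MX", "example.com", "1 smtp.google.com."),
    ]
    imported = [
        Record("A", "Example.com.", "192.0.2.10"),
        Record("CNAME", "www.example.com", "example.com"),
    ]
    # Should report the MX record as missing and nothing as unexpected
    print(diff_records(current, imported))
```

Anything under "missing" needs to be added in Cloudflare before you switch nameservers; anything under "unexpected" deserves a second look.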

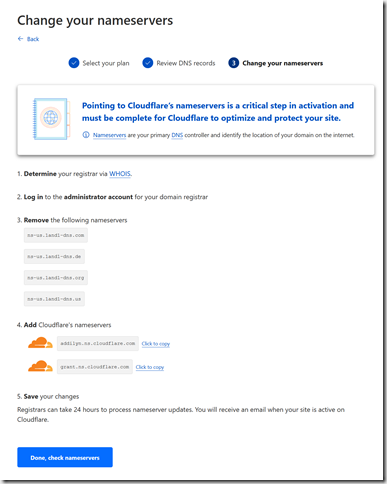

As I mentioned above, this is where you actually transfer DNS to Cloudflare’s nameservers. If you’re on a shared host, double-check whether you need any additional configuration in your website host when using external nameservers. At a bare minimum, you’ll need to visit your host to switch the nameserver list.

If you’re using your domain to forward traffic to another URL, you can now set up the forwarding in Cloudflare to hopefully avoid traffic interruptions. Cloudflare’s documentation is at https://developers.cloudflare.com/support/page-rules/configuring-url-forwarding-or-redirects-with-page-rules/.
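Cloudflare’s forwarding rules match a URL pattern containing `*` wildcards, and the destination can refer to what each wildcard captured as `$1`, `$2`, and so on. Before saving a rule, I like to sanity-check the pattern. The sketch below imitates that idea locally; it is not Cloudflare’s matching code, and it assumes the scheme has already been stripped from the incoming URL and ignores query-string handling. The registration URL in the example is hypothetical.

```python
from __future__ import annotations

import re


def compile_pattern(pattern: str) -> re.Pattern[str]:
    # Each "*" becomes a capturing group that matches any run of characters;
    # everything else in the pattern is matched literally.
    literal_parts = [re.escape(part) for part in pattern.split("*")]
    return re.compile("^" + "(.*)".join(literal_parts) + "$")


def forward_to(url: str, pattern: str, destination: str) -> str | None:
    """Return the redirect target for url, or None if the pattern doesn't match."""
    match = compile_pattern(pattern).match(url)
    if match is None:
        return None
    # Substitute $1, $2, ... with the text each wildcard captured
    return re.sub(r"\$(\d+)", lambda ref: match.group(int(ref.group(1))), destination)


if __name__ == "__main__":
    # Hypothetical event registration site; $2 carries the path across
    print(forward_to("www.example.com/signup", "*example.com/*",
                     "https://registration.example.org/$2"))
    # -> https://registration.example.org/signup
```

The leading `*` in the pattern also catches `www.` and any other subdomain, which is usually what you want for a forwarding-only domain.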

Step 3: Switch Nameservers

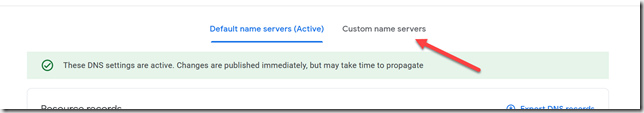

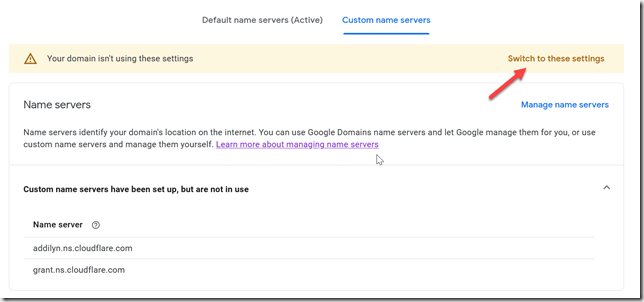

If you’re using Google’s nameservers, go back to Google Domains and open the DNS page. At the top of the page is an almost invisible set of tabs; click “Custom name servers”.

Add the nameservers Cloudflare told you to use, and click the “Switch to these settings” link in the yellow alert bar.

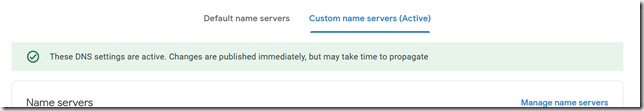

Once you see this, you’re done.

Google’s documentation for this process is at https://support.google.com/domains/answer/3290309.

If you’re using a shared host’s nameservers instead, every host has a different control panel, so you’re kind of on your own for this part. Look up their docs.

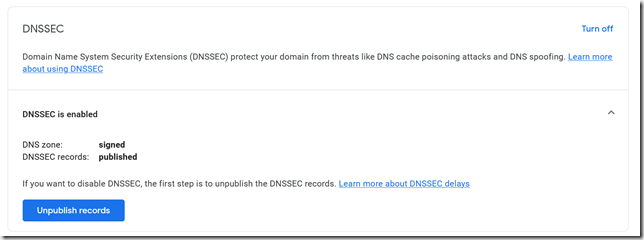

Step 4: Turn Off DNSSEC

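Regardless of whose nameservers you’re using, you need to turn off DNSSEC. The DNSSEC records published for your domain vouch for your current DNS provider’s signing keys; once Cloudflare’s nameservers start answering with different keys, resolvers that validate DNSSEC would treat your domain as broken. This is back in Google Domains, on the DNS page.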

Click “Unpublish records” and you’re done with that.
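To see why DNSSEC has to come off first, it helps to look at what the parent zone actually stores: a DS record containing a key tag and a digest of one specific DNSKEY. Here’s a minimal sketch of those two calculations following RFC 4034 (key tag from Appendix B, SHA-256 digest type 2). The key bytes below are made up, standing in for the old and new providers’ keys; they are not real DNSSEC keys.

```python
from __future__ import annotations

import hashlib
import struct


def dnskey_rdata(flags: int, protocol: int, algorithm: int, public_key: bytes) -> bytes:
    # DNSKEY RDATA wire format: 2-byte flags, 1-byte protocol, 1-byte algorithm, key
    return struct.pack("!HBB", flags, protocol, algorithm) + public_key


def key_tag(rdata: bytes) -> int:
    # RFC 4034 Appendix B checksum (valid for every algorithm except obsolete RSA/MD5)
    ac = 0
    for i, b in enumerate(rdata):
        ac += b if i & 1 else b << 8
    ac += (ac >> 16) & 0xFFFF
    return ac & 0xFFFF


def owner_wire(name: str) -> bytes:
    # Canonical wire format: lowercase labels, each length-prefixed, then a zero byte
    out = b""
    for label in name.rstrip(".").lower().split("."):
        out += bytes([len(label)]) + label.encode("ascii")
    return out + b"\x00"


def ds_digest_sha256(owner: str, rdata: bytes) -> str:
    # DS digest type 2: SHA-256 over (owner name | DNSKEY RDATA)
    return hashlib.sha256(owner_wire(owner) + rdata).hexdigest().upper()


def ds_matches(tag: int, digest: str, owner: str, rdata: bytes) -> bool:
    """Would a validating resolver accept this DNSKEY under this DS record?"""
    return tag == key_tag(rdata) and digest == ds_digest_sha256(owner, rdata)


if __name__ == "__main__":
    # Made-up key material: 257 = key-signing key, 3 = protocol, 13 = ECDSA P-256
    old_key = dnskey_rdata(257, 3, 13, b"old-provider-key")
    new_key = dnskey_rdata(257, 3, 13, b"new-provider-key")
    ds_tag, ds_digest = key_tag(old_key), ds_digest_sha256("example.com", old_key)
    print(ds_matches(ds_tag, ds_digest, "example.com", old_key))  # -> True
    print(ds_matches(ds_tag, ds_digest, "example.com", new_key))  # -> False
```

The stale DS record still describes the old key, so answers signed with the new key fail validation. That’s why you unpublish it now and turn DNSSEC back on once Cloudflare is in charge.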

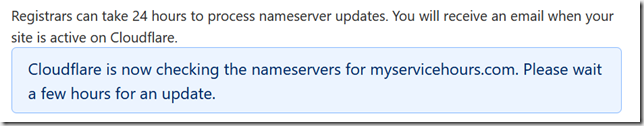

Step 5: Check Nameservers (and wait, probably)

Go back to Cloudflare, click the “Check nameservers” button, and wait for the confirmation email. Despite the note that it may take a few hours, it took only about 10 minutes for me.
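If you’d rather watch the switch yourself than refresh your inbox, you can poll the domain’s NS records with an increasing delay between checks. This is a minimal sketch; `wait_for_nameservers` is a hypothetical helper, the lookup function is passed in so the code stays standard-library only, and the Cloudflare nameserver names are placeholders for the two Cloudflare assigned you.

```python
from __future__ import annotations

import time
from typing import Callable, Iterable


def normalize_ns(names: Iterable[str]) -> set[str]:
    # Nameserver hostnames are case-insensitive; drop any trailing root dot
    return {n.strip().rstrip(".").lower() for n in names}


def wait_for_nameservers(
    lookup: Callable[[str], Iterable[str]],
    domain: str,
    expected: Iterable[str],
    timeout_s: float = 3600,
    first_delay_s: float = 30,
    max_delay_s: float = 600,
    sleep: Callable[[float], None] = time.sleep,
) -> bool:
    """Poll until lookup(domain) returns exactly the expected NS set, or time out."""
    want = normalize_ns(expected)
    delay = first_delay_s
    deadline = time.monotonic() + timeout_s
    while True:
        if normalize_ns(lookup(domain)) == want:
            return True
        if time.monotonic() >= deadline:
            return False
        sleep(min(delay, max(0.0, deadline - time.monotonic())))
        delay = min(delay * 2, max_delay_s)  # back off: 30s, 60s, 120s, ...


if __name__ == "__main__":
    # Stub lookup that "propagates" on the second query
    answers = iter([["ns-cloud-a1.googledomains.com."],
                    ["ada.ns.cloudflare.com.", "bob.ns.cloudflare.com."]])
    print(wait_for_nameservers(lambda d: next(answers), "example.com",
                               ["ada.ns.cloudflare.com", "bob.ns.cloudflare.com"],
                               sleep=lambda s: None))  # -> True
```

For real lookups, the third-party dnspython package works well, for example `lambda d: [ns.target.to_text() for ns in dns.resolver.resolve(d, "NS")]`. Keep in mind that resolvers may keep answering with cached records until the old TTL runs out.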

Step 6: While You Wait, Check Payment Info

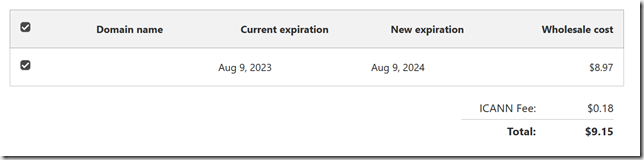

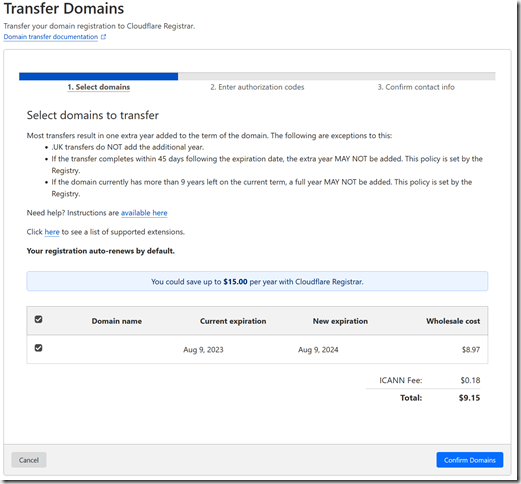

While you’re waiting, check your payment information. If you set up a new account (like I did), you need a valid credit card on file in order to transfer a domain. There is a transfer fee, but it also adds a year to your registration (with some exceptions, so read the page).

Step 7: Initiate Transfer

After you receive your confirmation email that the nameservers have been updated, log back in to Google Domains and Cloudflare. In Cloudflare, go to Domain Registration >> Transfer Domains, and select the domain you want to transfer, then click Confirm Domains.

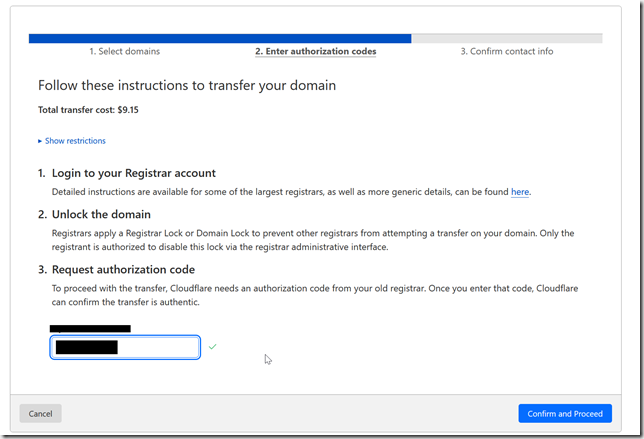

Go back to Google Domains, and perform the following steps:

If you did not unlock the domain earlier, go to Registration Settings and turn off the domain lock.

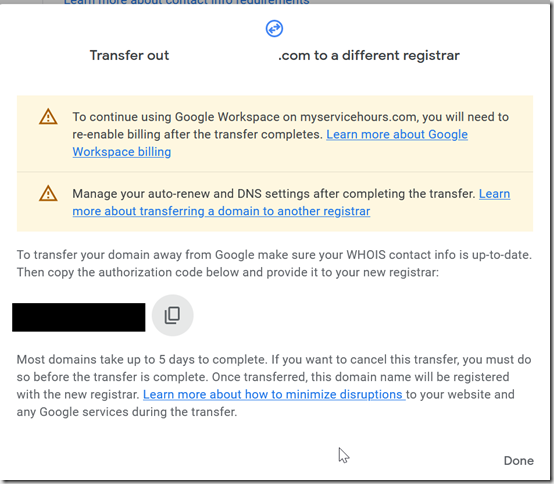

Get the auth code (the transfer code). You’ll have to re-authenticate to Google, and the code appears in a pop-up window.

Copy the transfer code and paste it into the box in Cloudflare.

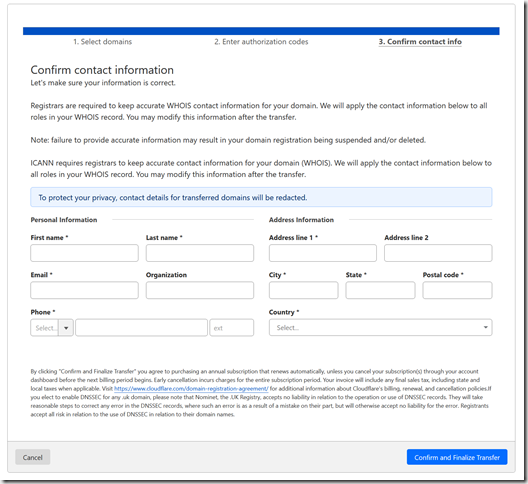

Add your registration details, and click the “Confirm and Finalize Transfer” button. These fields might be auto-filled if you had turned off Privacy Protection, but I wasn’t going to risk exposing my contact information to harvester bots.

In addition to the confirmation page, Cloudflare will send you an email confirming your intent and that you have been charged.

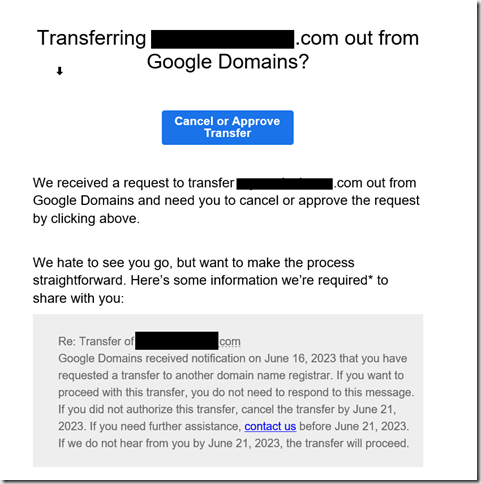

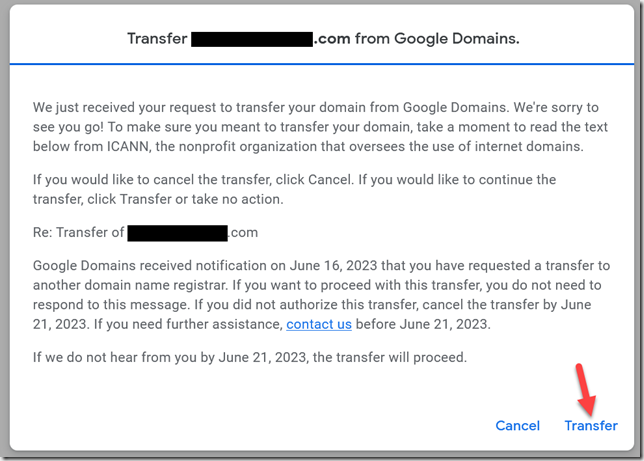

Within a few minutes, Google Domains will send an email asking you to approve the transfer. Click the button in that email to open a pop-up in Google Domains, then click the Transfer link.

A few minutes later, you’ll get an email from Cloudflare confirming the transfer is complete.

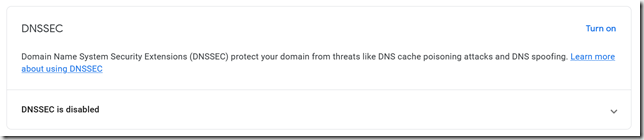

Step 8: Turn DNSSEC Back On

In Cloudflare, choose your domain from the list of Websites, then go to DNS >> Settings, and click the Enable DNSSEC button.