In discussions of data lakes, questions about the differences between and the future of data warehouses inevitably arise. In the modern corporate information factory there is a place for both, and with the availability of managed cloud offerings it’s possible to do both even on a small scale.

Let’s start by reviewing data warehouses. A data warehouse is a highly curated, highly structured database. A data warehouse may use fact tables or dimensional cubes, and the ETL process is designed to put only the highest quality data into rigid structures.

Data warehouses excel and answering the same questions over and over with high fidelity and high performance. This is an important business capability since many enterprises rely on periodic sales reports or inventory levels or manufacturing details. Additionally, data warehouses are designed to return these answers quickly, and reports can often be run on demand with up-to-the-minute data.

Because they are structured for a purpose, and both ETL and queries are schema bound, changing a data warehouse is not a trivial task. This doesn’t lend itself to just keeping data around to explore “what-if” scenarios or exploratory data analysis. Data warehouses aren’t good structures to keep data around “just in case”, so there are occasions where you’ll find yourself thinking “if only we had the rest of the data!”

Data lakes are designed to be that way to keep the rest of the data in a cheap and explorable way. Data lakes are designed to store large amounts of data in its raw form, and to provide different ways of exploring the data. Although you could set up a data lake for reporting, that’s not the best use of the technologies. Data lake technologies are less suited for repeated answers, and are more designed for exploratory analytics. Data lakes are essentially unindexed heaps, so queries may take some time run when executed on demand. A lot of answers (or inputs to analyses) from data lakes are precalculated using map/reduce jobs.

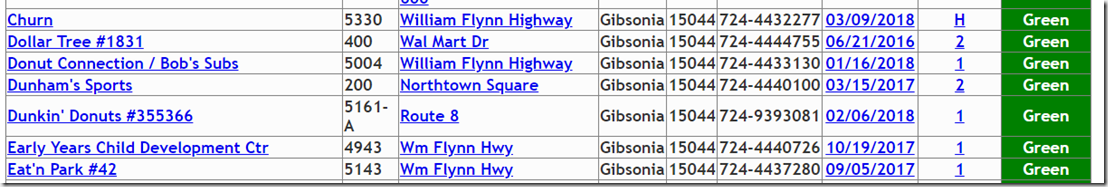

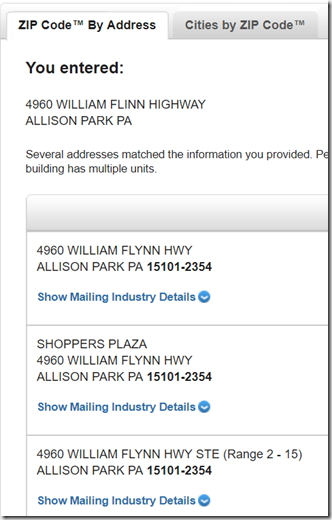

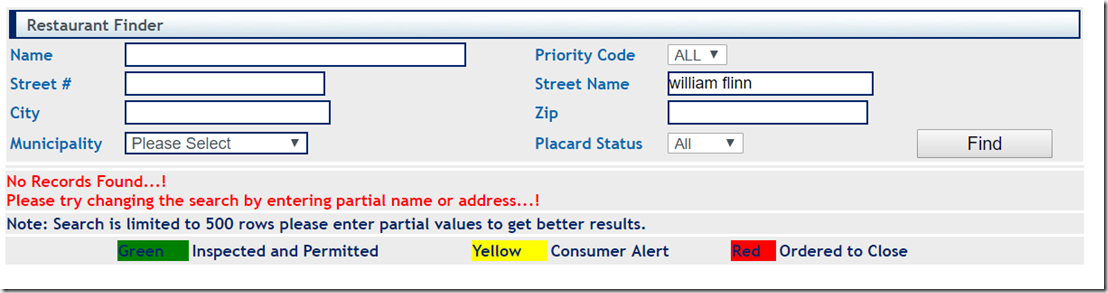

Let’s run thorough a thought experiment and see how a data warehouse and a data lake can work together. Imagine we’re a manufacturing company with a data warehouse which we use to report on manufacturing output, error rates, scrap amounts, etc. Raw data are written on paper forms, and supervisors enter the data into an application. An ETL process takes the data from this application’s database and loads both the data warehouse and the data lake.

Some of the company leaders have been reading studies which show the affect of changing the brightness of lights in a factory on manufacturing output. It has been decided that we’ll run week-long tests with brighter and dimmer lighting, and see which one gives us better results compared to a previous week.

After a week of elevated lighting and a week of decreased lighting, we run our usual reports out of the data warehouse and see that elevated lighting seemed to improve output and decrease shrink by a small percentage, while decreased lighting had a small negative effect. It seems clear that we should brighten the lights to squeeze out a little more productivity. But then someone has an observation–the week we turned the lights up was unseasonably sunny, while the week we turned the lights down was typical crappy January.

How easy would it be to test the effect of weather on our study using our data warehous? Since weather could include temperature, sunlight and precipitation, all of which would need to be added to dimensional models it probably wouldn’t be easy. And since we’re operating on a hunch, the work may not be worth it. However, it would be very easy to download historical data for the weeks of our test, or even for the past several years, into our data lake and run some ad-hoc analyses looking at one or more weather feature. If it turns out we want to add weather to our reports, we can update our data warehouse, ETLs and reports. We could even download forecasts and do some predictive analytics.

The allure of big data technologies isn’t just the ability to work with huge datasets, but being able to run dozens or hundreds of analyses on medium-to-small datasets for analyses just like this. When paired with traditional warehousing, data professionals have two very powerful tools.