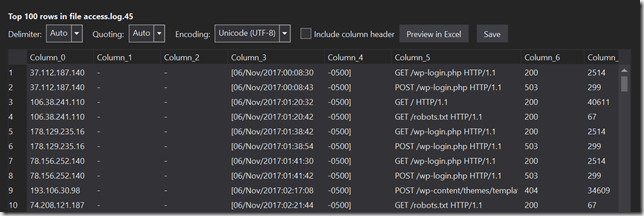

Time for some real profiling, and right away I discover a reason to do this. On my first run my script failed with this error:

Error occurred while extracting row after processing 12360 record(s) in the vertex' input split. Column index: 10, column name: 'user_agent'. ... Invalid character is detected following the ending quote character in a quoted field. A column delimiter, row delimiter or EOF is expected. This error can occur if double-quotes within the field are not correctly escaped as two double-quotes.

I hunted down the offending entry and found this data in the user_agent column (truncated for safety and space):

"}__test|O:21:\"JDatabaseDriverMysqli\":3:{s:2:\"fc\";O:17:\"JSimplepieFactory\":0:{}s:21:\"\\0\\0\\0disconnectHandlers\";a:1:{i:0;a:2:{i:0;O:9:\"SimplePie\":5:{s:8:\"sanitize\";O:20:\"JDatabaseDriverMysql\":0:{}s:8:\"feed_url\";s:3814:\" (lots more I'm not showing here)

This error occurs several times across a number of files, so it’s not just a one-off fluke. Through some searching I found this was a Joomla exploit, but WordPress doesn’t seem to be affected. This is interesting, and confirms exploits are being attempted against sites frequently.

Because this log entry isn’t parsing correctly, and is throwing a fatal error, I need to figure out both how to keep processing past the errors, and also how to catch and log these errors.

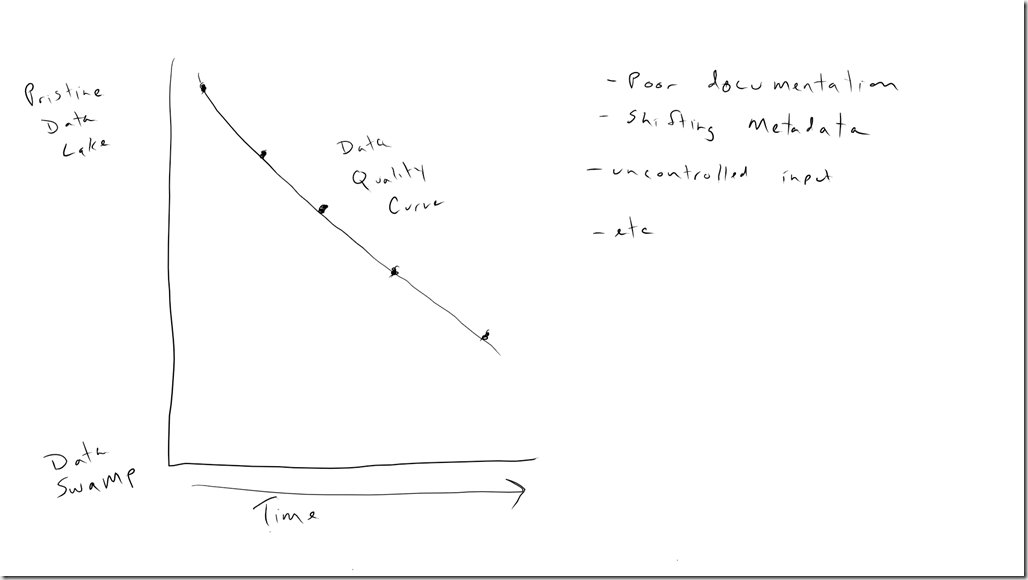

According to U-SQL Error: Unexpected number of columns in input record, there is a “silent : true” option I can add to my EXTRACT statement, but this is meant for times when the column number differs, not other types of errors. Catching other errors is a little more complicated, and according to the same article the best option right now is with a custom extractor, so it looks like I might need that after all. Eliminating invalid entries is one strong reason to have a transformed zone.

User Defined Extractor

Microsoft has some basic documentation on programming user defined extractors. There isn’t any discussion about best practices for structuring a project or unit testing, but there’s a basic example. At the time of his blog post the documentation is pretty lacking, so the rest of this post represents a couple weeks of trial and error. I will be contributing to the documentation, too. The code sample for this project can be found at https://github.com/rjdudley/AzureDLLogParser.

User-defined extractors (UDEs) are one of several types of user-defined objects (UDOs) supported by the Azure Data Lake. If you’re familiar with the common language runtime (CLR) in SQL Server, UDOs are analogous to CLR Objects. If you’re not familiar with CLR Objects or Azure Data Lake UDOs, you just need to know they are both .NET classes which can be called by the query code. The methods in the UDOs can be called like a standard function. Needless to say, UDOs can be very powerful. In fact, one of the “killer features” of Azure Data Lake is being able to easily extend the functionality of the query language using languages and tooling you’re very familiar with.

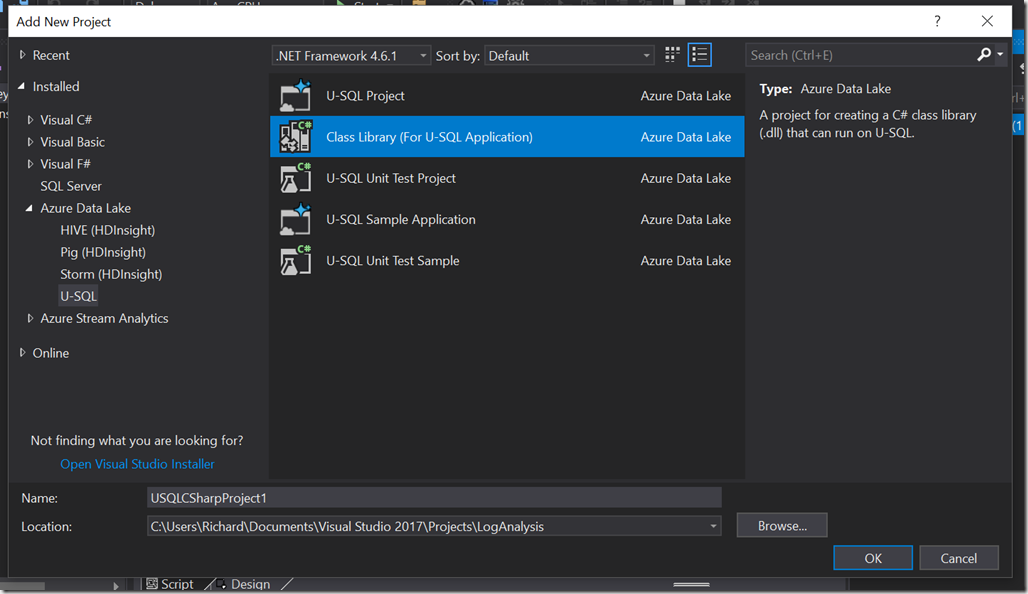

To begin creating a UDE, you start by adding a new “Class Library For U-SQL Solution” to your solution. This is a template project with all the necessary references, you could use a regular class library and then add all the references yourself. Be lazy, use the template.

Since a UDE is a .NET class (in this example, C#), I also recommend unit testing. There is a “U-SQL Test Project” but that’s meant for testing the U-SQL code. Just use a regular class library and include your favorite unit test framework (my sample uses NUnit).

In your UDE project, add a new class file. This class needs to implement IExtractor, and needs to be decorated with the SqlUserDefinedExtractor attribute. This attribute indicates this is the class with the entry point. IExtractor has one required method, named Extract, which as two parameters–input and output. They are exactly what they sound like–input is the data we’ll be acting on, and output is the object we’ll build up through our processing.

public class Class1 : IExtractor

{

public override IEnumerable<IRow> Extract(IUnstructuredReader input, IUpdatableRow output)

{

throw new NotImplementedException();

}

}

Input is an unstructured object, which means processing is completely up to us. Whether it’s deserializing JSON or XML, or splitting on some other delimiter, or anything else, that’s probably the first thing we do. On my case, splitting on a space was not the best solution because of the data structure. Instead, I’ll use a regular expression (yes, I know, now I’ll have two problems) to parse the entry, and then piece the data together in a more coherent manner.

Inside the Extract function is where we do the initial splitting of the input, call our helper methods, and return the output. The SELECT I wrote in Chapter 2 retrieves an entire file at one time, and passes that into the input (refer to https://github.com/rjdudley/AzureDLLogParser/blob/master/LogAnalysis/Script.usql). I then need to split the file on the newline character to get an array of the individual rows, then iterate through the rows, as seen in https://github.com/rjdudley/AzureDLLogParser/blob/master/LogParser/LogFileReader.cs:

This file contains bidirectional Unicode text that may be interpreted or compiled differently than what appears below. To review, open the file in an editor that reveals hidden Unicode characters.

Learn more about bidirectional Unicode characters

| public override IEnumerable<IRow> Extract(IUnstructuredReader input, IUpdatableRow output) | |

| { | |

| string line; | |

| //Read the input line by line | |

| foreach (Stream current in input.Split(_row_delim)) | |

| { | |

| using (StreamReader streamReader = new StreamReader(current, this._encoding)) | |

| { | |

| line = streamReader.ReadToEnd().Trim(); | |

| LogRowParser splitter = new LogRowParser(); | |

| LogRowElements parts = new LogRowElements(); | |

| parts = splitter.ParseElements(line); | |

| // … | |

| yield return output.AsReadOnly(); | |

| } | |

| } | |

| } |

I added two additional classes: LogRowParser and LogRowElements. I did this to make unit testing easier. Most of the actual work I need to do will be done in LogRowParser.

To reiterate, it’s inside the Extract method where we process the input–whether we deserialize XML or JSON, or split a file on a line terminator, or whatever. The result will be a collection of objects, or lines of a file, or whatever, which we then process using additional classes. We build a row of data using output.Set<T>(), which is then passed back into the U-SQL EXTRACT statement.

Returning data back to the EXTRACT statement looks weird but makes sense when you think about it. The “output” object is of type IUpdateableRow, so we have to set the type and value of each column using Set<T>(index, value) method. For this to work correctly, you need to follow the same order as specified in the EXTRACT statement.

Once all the inputs have been processed, we then have a dataset we can perform one or more SELECT statements against. In our model, the results of these SELECT statements would then be persisted in our transformed zone. That’s the next chapter, though.

Once the UDE code is written and our tests pass, it’s time to really test it out. If you’re so inclined you can create a local debugging instance (see https://docs.microsoft.com/en-us/azure/data-lake-analytics/data-lake-tools-for-vscode-local-run-and-debug) but that seems like a lot of effort for limited functionality. Personally I found it easier to deploy to the cloud instance and run there, and you need to get the assemblies there anyway so it’s not wasted knowledge.

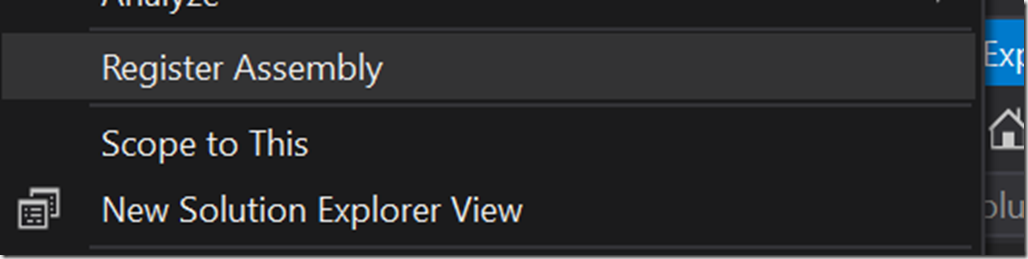

Just as you need to register SQL CLR objects in your SQL Server database, you also need to register UDOs in your U-SQL catalog. Documentation is at https://blogs.msdn.microsoft.com/azuredatalake/2016/08/26/how-to-register-u-sql-assemblies-in-your-u-sql-catalog/.

To save you some time: in Visual Studio, you register assembly by right-slicking on the C# project, choosing “Register Assembly”, change any values you need to (I kept all defaults for the purposes of this blog series), and clicking Submit.

Once your assembly is registered, you can submit your U-SQL script. If all goes well, your results will end up in your transformed zone. This took me weeks of my “after the kids are in bed” time to get this all working.

End Notes

This blog post went through many, many edits as I worked this all out over several weeks, and it’s still terribly stream of consciousness for which I apologize. It took some time to put all the pieces together, so if your stuff doesn’t work at first just keep working on it. As I mentioned above I’ll contribute to the official documentation as well as continuing this blog series.

Turns out using a regex to parse web server logs is a fairly common practice. Most of the examples focus on a pattern for the whole string, such as http://statmodeling.com/regular-expression-for-apache-log-parsing.html and https://www.regextester.com/95830 for a couple examples. My host’s log format is a little different than the Apache combined log format, so I needed to tweak that regex a bit. I used https://regex101.com/ to build my regex, which is saved at https://regex101.com/r/IKP5nb/1. I did try to use this pattern, but it proved to be difficult. I may try again later in this series to improve the code, but to just get the thing working I decided to split on quoted text pattern, using https://stackoverflow.com/questions/17465099/regex-how-to-split-string-with-space-and-double-quote. This means I’ll have to do some manipulations to the data during extraction but that’s an opportunity to learn.