My task this time is profiling the log data. I love profiling data, to me it’s like people watching at the mall. You find a lot of interesting stuff just by looking. The profiling task will actually accomplish four things:

- I’ll get to test recursive or wildcard querying in U-SQL.

- I’ll get to see if the subfolders named for the log year are good idea by how messy #1 is.

- I’ll have some profiled data I can use when I start my analysis queries. I know from past experience that one of the columns in a log file is the byte size of the resource being requested, but not all resources have a byte size (like a default route URI). In this case the server stores a “-” instead of a numeric value. This would break my analytics unless I handled this situation.

- I’ll see if saving results to a flat file in an output folder is a good idea, or if I should switch to database tables. Profiling is an intensive task, and sometimes it’s good to offload objects in memory, or run in parallel. In either of these cases outputting to a flat file isn’t always the best idea.

Before I start any profiling, I need to get my log data out of my files and into a consolidated dataset. That’s the focus of this blog post, the actual profiling will start in the next post.

Results: Getting one file to work

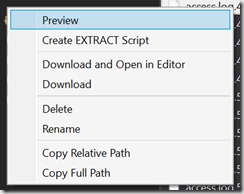

First thing I’m going to do is get data from just one hardcoded file. If I know one file works, I always have a fallback if things are being wonky. The Azure Data Lake Explorer in Visual Studio (not to be confused with the Azure Storage Explorer I mentioned previously, which is a standalone application) has several useful functions on its right-click menu. When I drill down to a file, I can Preview the first 100 lines, which will give me an idea of how the U-SQL EXTRACT function will interpret the file with zero work on my part. The second function is creating that EXTRACT script, which I can use as-is or tweak to suit my needs.

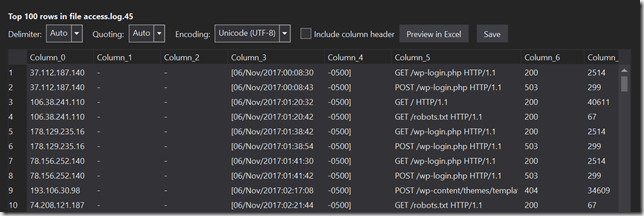

With just a click of a button, I get this, and I can see how the files will be processed. Apache server logs are sort of space delimited, but not really. This is why they’re so hard to process is the standard tools. For instance, the timestamp is wrapped in square brackets, which a space between the time and offset. This results in Column 3 having the date and time, plus a leading “[“, and Column 4 with the offset and a trailing “]”. Also, the method, URL and protocol are all quoted in the log file, so they end up in the same column, Column 5.

Since this log is for a blog hosted on a shared server, I don’t have control over the file format, but the challenges are what makes this project fun.

To get useful data, I’m going to have to clean up and reassemble the timestamp, and also split apart Column 5 into its components. This is exactly why I have a raw ad a transformed zone in my storage–I don’t want to have to do these (and other transforms) in the query every time I want to run some analysis; I’d prefer to run my analyses against the transformed data.

Something to consider at this point is that I could build a user-defined function which would process the rows a little more intelligently (see

Extending U-SQL Expressions with User-Code), and maybe I’ll do that later on. The temptation would be to run analyses against the raw data and use the UDF to basically create an in-memory transformed zone when I am running analyses. I think I’d still want the transformed later and save on the CPU cycles and memory usage.

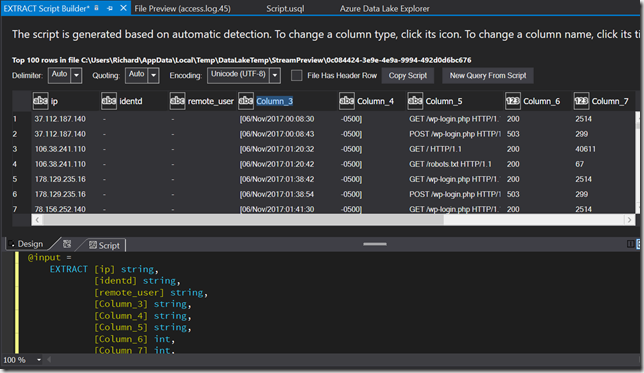

The File Preview also has a “Create EXTRACT Script” button, which opens the EXTRACT Script Builder. Here I can change column names and adjust types. Note that the preview and column type inference is only the first 100 characters, and some excitement probably lurks (which is why I’m profiling in the first place).

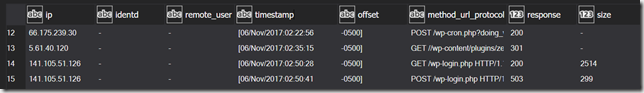

Column 7 is the byte size of the requested URL, which I know to be a possible problem spot. The script builder interprets this as an integer, but scrolling down a few lines my suspicions are confirmed. Two entries have a byte size of “-“, which won’t parse correctly. At this exact moment I can’t decide if I want to convert “-” to zero or null (zero will be counted in averages, null won’t) so for now, since I’m just building a profiling script, I’m going to type this as a string and deal with it after I analyze my profiling.

Now it’s time to profile my selected file. This extract script is being generated to run against the one file I selected to preview, so later on I’ll still need to work in wildcards or recursive queries. As a getting started, one file is perfect.

Before I Submit this script I need to add an OUTPUT statement also, since that’s part of the definition of a complete script in U-SQL. This has the net effect of rewriting the raw file into the transformed folder with a comma between the columns, but that’s good for a first run. Here’s the full script of my initial test:

This file contains bidirectional Unicode text that may be interpreted or compiled differently than what appears below. To review, open the file in an editor that reveals hidden Unicode characters.

Learn more about bidirectional Unicode characters

| @input = | |

| EXTRACT ip string, | |

| identd string, | |

| remote_user string, | |

| timestamp string, | |

| offset string, | |

| method_file_protocol string, | |

| response int, | |

| size string, | |

| domain string, | |

| url string, | |

| user_agent string, | |

| forwarded string | |

| FROM "adl://redactedprojctname.azuredatalakestore.net/mylogs_raw/2017/access.log.45" | |

| USING Extractors.Text(delimiter:' '); | |

| OUTPUT @input | |

| TO "adl://redactedprojectname.azuredatalakestore.net/mylogs_transformed/input45.txt" | |

| USING Outputters.Csv(); |

I Submit the script, and success! But that’s only one file. How to process all of my logs?

Results: Wildcard and subfolders

This could not have been easier in U-SQL. All I had to do was change the FROM to read as below, and every log file in both folders was processed into one @input. I did not have to make lists of subfolders, then loop through the subfolders and read files one at time like we have to do in C#. U-SQL handled everything for me.

FROM "adl://redactedprojectname.azuredatalakestore.net/mylogs_raw/{*}/access.{*}"

I can start querying the whole dataset now! Your mileage may vary based on how much data you have, but this works for me for now.

For a little more information about using files and filesets, see Files and File Sets as Inputs and Outputs (U-SQL).