It’s “one of those days” project time! I want to run an ASP.NET Core site on AWS, using the ASP.NET Core Identity provider for user AuthN/AuthZ. ASP.NET Core Identity has enough features to get started, can be extended, and is free. The most common back-end for Identity is SQL Server, but I want to use a managed database like PostgreSQL instead, because I don’t want to be a DBA this time. Fortunately, switching from SQL Server to PostgreSQL is simple, just not well known.

Although you can add Identity at any time during development, you really want to install and configure it before you do anything else, since an EF Core migration is involved. It’ll also set a better baseline in your git history.

(Note: this is an updated and expanded post, based on https://stackoverflow.com/questions/65970582/how-to-create-a-postgres-identity-database-for-use-with-asp-net-core-with-dotnet. A few things have changed since that answer was posted, and some additional explanation may be helpful too.)

Step 1: Create the database (use your database IDE)

A. Create a database user, with a password and login permissions.

B. Create your Identity database, and assign that user as the owner of the database. The DDL in the migration needs a lot of permissions, so ownership is the simplest option for now; you can configure a least-privileged user later.
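
If you’d rather script Step 1 than click through an IDE, here is a minimal sketch. It reuses the user, password, and database names from the dev connection string used later in this post. The file name create_identity_db.sql and the postgres superuser login are my assumptions, so adjust them for your instance.

```shell
# Write the DDL to a file so you can review it before running it.
# Names match the dev connection string used later in this post.
cat > create_identity_db.sql <<'SQL'
-- A. A user with a password and login permission
CREATE ROLE wombat_user WITH LOGIN PASSWORD 'w0mb@t';
-- B. The Identity database, owned by that user
CREATE DATABASE wombat_identity OWNER wombat_user;
SQL

# Then run it as a superuser. Assumption: a local instance with the default
# "postgres" superuser; uncomment and adjust for your setup.
# psql -h localhost -U postgres -f create_identity_db.sql
```

Making the user the owner is the broad-permissions shortcut described above, not a production recommendation.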

Step 2: Create your site and install Identity

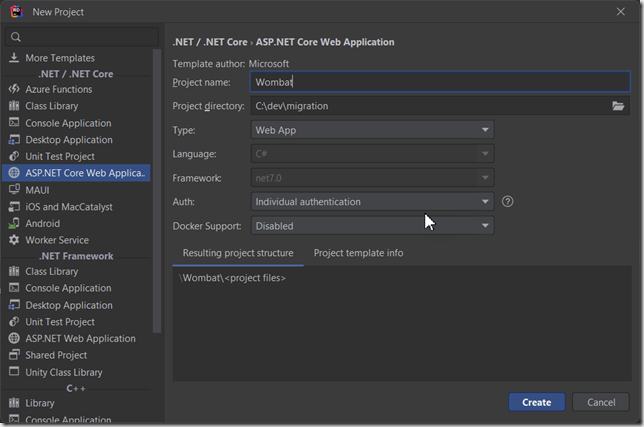

Create an ASP.NET Core site with Individual Identity selected.
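
If you work from the command line rather than Visual Studio, the equivalent is roughly the following. This is a sketch that assumes the Razor Pages template (webapp); WombatSite is a made-up project name.

```shell
# Create a Razor Pages site with ASP.NET Core Identity (individual accounts).
# "WombatSite" is a placeholder for the output folder / project name.
dotnet new webapp --auth Individual --output WombatSite
```

From the CLI this template uses SQLite by default, which is why Step 3 starts by removing that package.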

If you have a site already without Identity, you can scaffold it, per https://learn.microsoft.com/en-us/aspnet/core/security/authentication/scaffold-identity.

If you create a site with Individual Identity, the DbContext and UserContext are created for you; if you scaffold it in later, you’ll have to add these yourself.

This is a good time to commit to git, in case you need to revert anything we do in the next steps. Or so I’ve been told…

In appsettings.json, set the DefaultConnection to your PostgreSQL instance. For dev, I’m running it in Docker so my string looks like this:

Host=localhost:5432;Username=wombat_user;Password=w0mb@t;Database=wombat_identity

Step 3: Configure the NuGet packages

First, delete the Sqlite package. We don’t need this anymore.

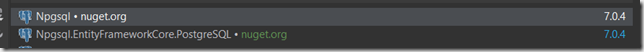

Next, install the latest version of these packages
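
From the command line, the package changes look roughly like this. At a minimum you need the Npgsql EF Core provider, since that is where UseNpgsql comes from. Treat this as a sketch and add anything else your project needs.

```shell
# Run from the folder that contains your .csproj.
# Remove the SQLite provider that the template added.
dotnet remove package Microsoft.EntityFrameworkCore.Sqlite
# Add the PostgreSQL provider for EF Core.
dotnet add package Npgsql.EntityFrameworkCore.PostgreSQL
```

Keep the provider’s major version in line with the EF Core version your project targets.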

Finally, set the database provider in Program.cs. Around line 10 you’ll see the AddDbContext line. Change UseSqlServer (or UseSqlite) to UseNpgsql and save the file.
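
If you want to script that change, this is one way to do it with sed. It’s only a sketch: switch_to_npgsql is a hypothetical helper name, and it assumes the provider call is written as UseSqlite( or UseSqlServer(, as in the default template.

```shell
# Hypothetical helper: swap the EF Core provider call in a file, in place.
# Keeps a backup next to the original with a .bak extension.
switch_to_npgsql() {
  sed -i.bak -E 's/UseSql(ite|Server)\(/UseNpgsql(/' "$1"
}

# Usage, from the project folder:
#   switch_to_npgsql Program.cs
# The AddDbContext line should then contain something like:
#   options.UseNpgsql(connectionString)
```

The project will only build once the Npgsql EF Core provider package is installed, because that package supplies UseNpgsql.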

Step 4: Run the EF Migrations

If you need to, install the EF Core command-line tools:

dotnet tool install --global dotnet-ef

First, clean up any cruft from the default installation:

dotnet ef migrations remove

Now, create a migration for PostgreSQL:

dotnet ef migrations add {a good migration name}

Then, apply the migration.

dotnet ef database update

You should now see all the database objects for ASP.NET Core Identity in your database.

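If the update throws an error, especially one about casting TwoFactorEnabled to a boolean, you probably need to remove the migrations again and retry. The most likely cause is that a migration generated for the template’s original provider is still in the project. Removing and recreating the migration worked for me.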

This is an excellent time to commit to git.

The Identity pages ship in a Razor class library (Microsoft.AspNetCore.Identity.UI), so they just work without appearing in your project. If you plan on extending Identity, or just want the pages in your solution, you can scaffold them by right-clicking the project and choosing Add > Scaffolded Item > Identity. You’ll be prompted to choose the database context, and then all the pages will be added to your solution. Details on this can be found at https://learn.microsoft.com/en-us/aspnet/core/security/authentication/scaffold-identity?view=aspnetcore-7.0&tabs=visual-studio#scaffold-identity-into-a-razor-project-with-authorization.

At this point, my test site is running fine. It’s possible we’ll hit a snag in some of the more advanced capabilities, so there may be more blog posts to come.