Data lakes have emerged as a promising technology, and continued advances in cloud services and query technology are making them easier to implement and easier to use. But just like their ecological counterparts, data lakes don’t stay pristine on their own. Like a natural lake, a data lake is subject to processes which can gradually turn it into a swamp.

Causes of Lake Swampification

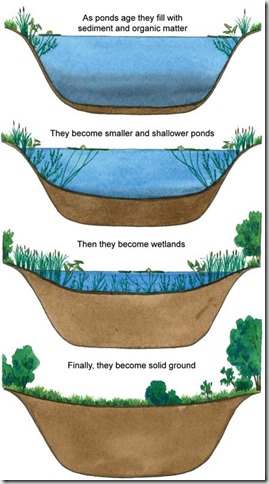

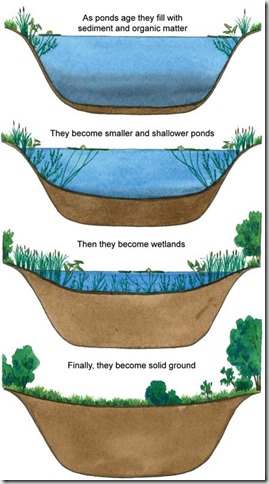

In the biological world, all lakes become swamps over time without intervention. This process is referred to as “pond succession”, “ecological succession”, or “swampification” (my favorite). This process is largely caused by three factors: sedimentation (erosion of hard particulates into the lake), pollution (chemicals which shouldn’t be there), and detritus (“decaying plant and animal material”). Visually, the process resembles the super slo-mo diagram below.

(image and quote from http://texasaquaticscience.org/lakes-ponds-aquatic-science-texas/)

Swamps are ecologically diverse systems, but they can also be polluted and rancid breeding grounds for disease. Because of this, they can be generally undesirable places, and a lot of effort has been expended to keep pristine aquatic systems from becoming swamps.

To extend the lake metaphor into the big data world, data lakes start as pristine bodies, but will require intervention (clean inputs into the system, handling of sediment and rotting material) to avoid becoming a disgusting data swamp. IBM agrees, stating:

A data lake contains data from various sources. However, without proper management and governance a data lake can quickly become a data swamp. A data swamp is unsafe to use because no one is sure where data came from, how reliable it is, and how it should be protected.

With data lakes, it’s important to move past the concept that data which is not tabular is somehow unstructured. On the contrary, RAW and JPG files from digital cameras are rich in data beyond the image; there just wasn’t a good way to query these data. PDFs, Office documents and XML events sent between applications are other examples of valuable non-tabular but regularly arranged data we may want to analyze.

Causes of Data Lake Swampification

Data lake swampification can be caused by the same forces as a biological lake–influxes of sediment, pollution and detritus:

1. In nature, sediment is material which does not break down easily and slowly fills up the lake by piling up in the lakebed. Natural sediment is usually inorganic material such as silt and sand, but can also include difficult-to-decompose material such as wood. Electronic sediment can be tremendously large blobs with little or no analytical value (does your data lake need the raw TIFF, or just the OCR output with the TIFF stored in a document management system?), or even good data indexed in the wrong location where it won’t be used in analysis. Not having a maintainable storage strategy covering both the types and locations of data will cause your data lake to fill with heaps and heaps of electronic sediment.

2. Pollution is the input of substances which have an adverse effect on a lake ecosystem. In nature these inputs could be fertilizer, which in small amounts can boost the productivity of a lake while large amounts cause dangerous algal overgrowth, or toxic substances which destroy life outright. Because data lakes are designed to be scaled wide, it’s tempting to fill them with data you don’t want to get rid of, but don’t know what to do with otherwise. Data pollution can also come from well-controlled inputs with misunderstood features or differing quality rules. Enterprise data are probably sourced from disparate systems, and these systems may have different names for the same feature, or the same name for different features, making analysis difficult.

3. Detritus in a natural lake is rotting organic matter. In a data lake, maybe it’s data you’re not analyzing anymore, or a partially implemented idea from someone who has moved on, or a poorly documented feature whose original purpose has been forgotten. Whatever the cause, over time, things which were once deemed useful may start to rot. Schema evolution is a fact of business–data elements in XML system event messages can be renamed, added or removed, and if your analytics use these elements, your analysis will be difficult or inaccurate. There may also be compliance or risk management reasons controlling the data you should store, and data falling outside those policies would also be sediment. Also, over time, the structure of your “unstructured data” may drift.
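The naming problem described under pollution (different names for the same feature, or the same name for different features) is one that can be checked mechanically once each source system's fields are catalogued. The following is a minimal sketch, assuming a hand-maintained catalog that maps each field name to the business concept it represents. The system names, field names, and the `find_naming_conflicts` helper are all hypothetical and only illustrate the idea.

```python
from collections import defaultdict

# Hypothetical field catalogs for two source systems. Each maps a field
# name to the business concept it represents. All names are illustrative.
SOURCE_FIELDS = {
    "crm": {"cust_id": "customer identifier", "status": "account status"},
    "billing": {"customer_no": "customer identifier", "status": "invoice status"},
}


def find_naming_conflicts(source_fields):
    """Return (synonyms, homonyms) found across the source systems.

    synonyms: concept -> sorted "system.field" names, for concepts that
              go by more than one field name.
    homonyms: field name -> sorted concepts, for field names that mean
              more than one thing.
    """
    qualified_by_concept = defaultdict(set)  # concept -> {"crm.cust_id", ...}
    names_by_concept = defaultdict(set)      # concept -> {"cust_id", ...}
    concepts_by_name = defaultdict(set)      # name -> {"account status", ...}

    for system, fields in source_fields.items():
        for name, concept in fields.items():
            qualified_by_concept[concept].add(f"{system}.{name}")
            names_by_concept[concept].add(name)
            concepts_by_name[name].add(concept)

    synonyms = {
        concept: sorted(qualified_by_concept[concept])
        for concept, names in names_by_concept.items()
        if len(names) > 1
    }
    homonyms = {
        name: sorted(concepts)
        for name, concepts in concepts_by_name.items()
        if len(concepts) > 1
    }
    return synonyms, homonyms


synonyms, homonyms = find_naming_conflicts(SOURCE_FIELDS)
print("Same feature, different names:", synonyms)
print("Same name, different features:", homonyms)
```

A report like this is only as good as the catalog behind it, so in practice it would be fed from whatever documentation or data dictionary your team already maintains.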

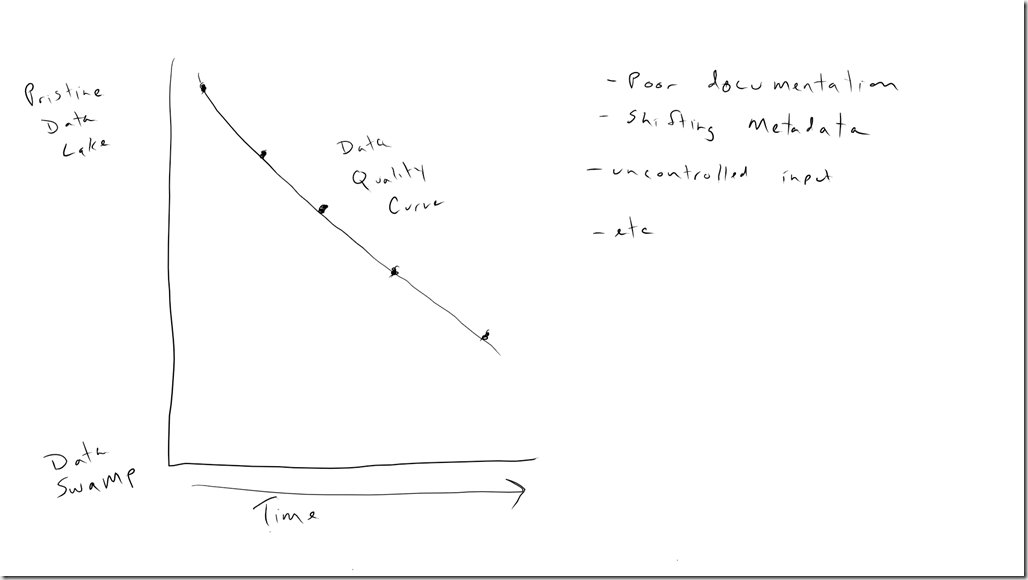

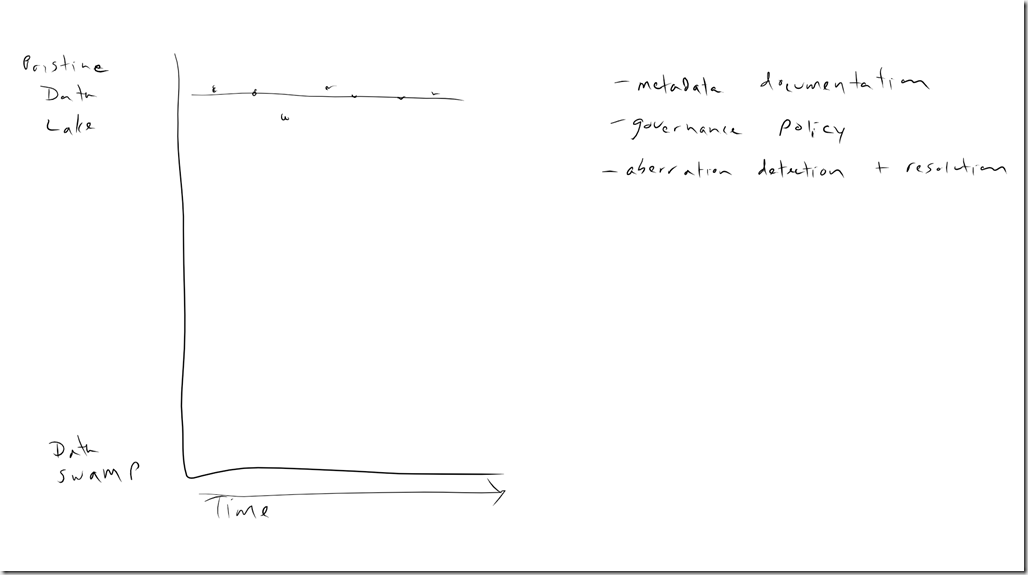

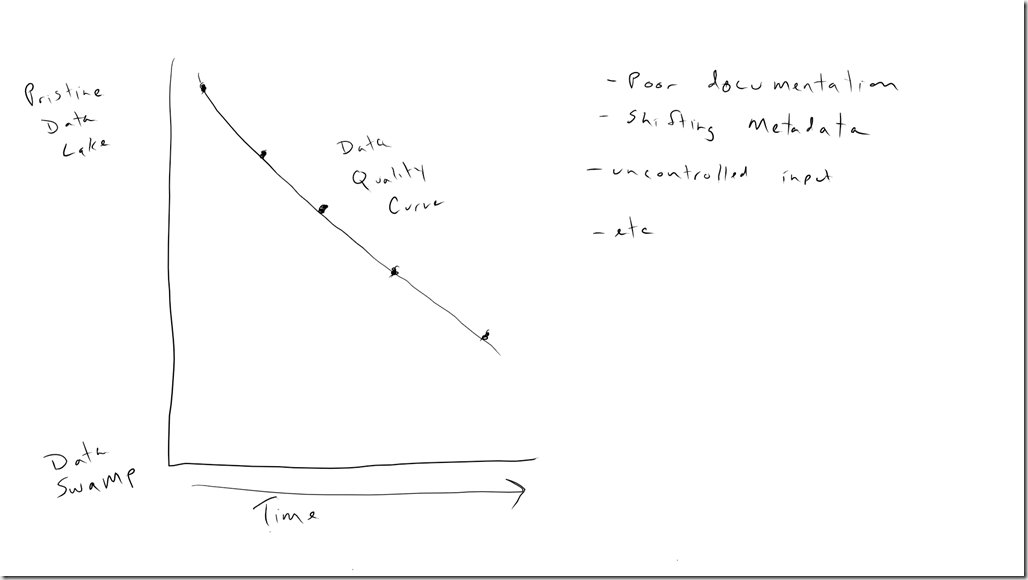

Because these factors affect the quality of data in your lake, you can plot a declining “data quality curve” (mathematical models are being developed and may be covered in a future blog post). Fundamentally, the goal is to keep the data quality curve relatively horizontal. The accompanying example shows a mismanaged data lake undergoing swampification.

Preventing and overcoming swampification

1. Have a governance policy regarding the inputs to your data lake. A data lake isn’t a dumping ground for everything and anything; it’s a carefully built and maintained datastore. Before you get too far into a data lake, develop policies for how to handle additions to your data lake, how to gather metadata and document changes in data structures, and who can access the data lake.

2. Part of a governance policy is a documentation policy, which means you need an easy-to-use collaboration tool. Empower and expect your team to use this tool. Document clearly the structure and meaning of the data types in your data lake, and record any changes as they occur. The technology can be anything from a simple wiki, to Atlassian’s Confluence or Microsoft’s SharePoint, to a governance tool like Collibra. It’s important that the system you choose is low friction for the users and fits your budget. Past recommendations for data lakes were to put everything in Hadoop and let the data models evolve over time.

3. Another part of a data governance policy is a data dictionary. Clearly define the meaning of the data stored and any transformations in your data lake. The maintenance and use should be as frictionless as possible to ensure longevity. Have a plan for the establishment and the ongoing maintenance of the data dictionary, including change protocols and a responsible person. If there is an enterprise data dictionary, that should be leveraged instead of starting a different one.

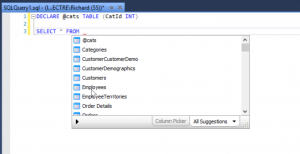

4. Evaluate technologies that can explore the schemas of what is stored and enforce rules. At the time of this writing, the Azure Data Lake can use PowerShell to enforce storage rules (e.g., “a PNG is stored outside of the image database”) and to explore metadata of the objects in the data lake. As the data lake ecosystem grows, continue to evaluate the new options.

5. Regularly audit metadata. Have a policy where every xth event message is inspected and its metadata logged, and implement it. If the metadata differs from what is expected, have a data steward investigate. “A means of creating, enriching, and managing semantic metadata incrementally is essential.” [8]

For some clarity, PwC says:

Data lakes require advanced metadata management methods, including machine-assisted scans, characterizations of the data files, and lineage tracking for each transformation. Should schema on read be the rule and predefined schema the exception? It depends on the sources. The former is ideal for working with rapidly changing data structures, while the latter is best for sub-second query response on highly structured data. [8]

Products such as Apache Atlas, HCatalog, Zaloni and Waterline can collect metadata and make it available to users or downstream applications.

6. Remember that schema evolution and versioning will probably happen, and plan for them from the beginning. Start storing existing event messages in an “Eventv1” index, or include metadata in the event which provides a version so your queries can handle variations elegantly. Otherwise you’ll have to use a lot of exception logic in your queries.

7. Control inputs. Maybe not everything belongs in your lake. Pollution is bad, and your lake shouldn’t be viewed as a dumping ground for anything and everything. Should you decide to add something to your data lake, it needs to follow your processes for metadata documentation, storage strategy, etc.

8. Sedimentation in a natural lake is remediated by dredging, and in a data lake that means archiving data you’re not using, and possibly having a dredging strategy. Although the idea behind a data lake is near-indefinite storage of almost everything, there may be compliance or risk reasons for removing raw data.
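Items 5 and 6 above (sampled metadata audits and version-aware events) fit together naturally, and can be sketched in a few lines. This is only an illustration under stated assumptions: the `schema_version` field, the expected field sets, the `audit_events` and `customer_of` helpers, and the sample events are all hypothetical, not part of any particular product.

```python
# Hypothetical expected fields for each event schema version.
EXPECTED_FIELDS = {
    1: {"event_id", "customer", "amount"},
    2: {"event_id", "customer_id", "amount", "currency"},
}

# Hypothetical: which field holds the customer key in each version.
CUSTOMER_FIELD = {1: "customer", 2: "customer_id"}


def audit_events(events, sample_every=3):
    """Inspect every `sample_every`-th event and report metadata drift.

    Returns a list of findings for a data steward to investigate.
    """
    findings = []
    for position, event in enumerate(events, start=1):
        if position % sample_every != 0:
            continue  # only every x-th message is inspected
        version = event.get("schema_version")
        expected = EXPECTED_FIELDS.get(version)
        if expected is None:
            findings.append({"position": position, "version": version,
                             "problem": "unknown schema version"})
            continue
        actual = set(event) - {"schema_version"}
        missing = sorted(expected - actual)
        unexpected = sorted(actual - expected)
        if missing or unexpected:
            findings.append({"position": position, "version": version,
                             "problem": "field mismatch",
                             "missing": missing, "unexpected": unexpected})
    return findings


def customer_of(event):
    """Read the customer key using the event's declared version."""
    return event[CUSTOMER_FIELD[event["schema_version"]]]


events = [
    {"schema_version": 1, "event_id": 1, "customer": "A17", "amount": 10.0},
    {"schema_version": 2, "event_id": 2, "customer_id": "A17",
     "amount": 5.0, "currency": "USD"},
    # Claims to be version 2 but still uses the version 1 field name.
    {"schema_version": 2, "event_id": 3, "customer": "B02",
     "amount": 7.5, "currency": "USD"},
]

findings = audit_events(events, sample_every=3)
for finding in findings:
    print(finding)
```

Because each event declares its version, a query can look up the right field name in one place instead of scattering exception logic, and the sampled audit catches messages whose content has drifted from what their version promises.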

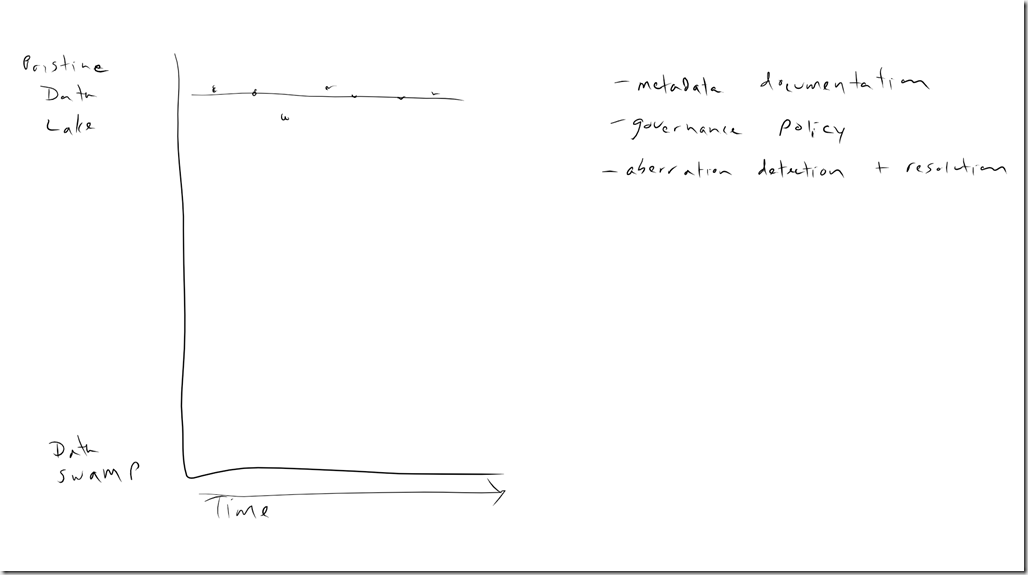

When effort is put into keeping a data lake pristine, we can imagine our data quality curve is much flatter. There will be times when the cleanliness of our data lake is affected, perhaps through personnel turnover or missed documentation–but the system can be brought back to a more pristine state with a little effort.

Additional Considerations

Just as a natural lake is divided into depth zones (limnetic, profundal, benthic, etc.), the data in a data lake needs a level of organization too. Raw data should be separated from cleansed/standardized data, which should be separated from analytics-ready data. You need these different zones because, for example, customers usually don’t enter their address information in a standardized format, which could affect your analysis. Each of these zones should have a specific security profile. Not everyone needs access to all the data in the data lake. A lack of proper access permissions is a real risk.

Implement data quality processes and allow time for all data to be cleansed and standardized to populate that zone. This isn’t easy, but it’s essential for accurate analysis and to ensure a pristine data lake.

It may also be beneficial to augment your raw data, perhaps with block codes or socioeconomic groups. Augmenting the original data changes the format of the original data, which may be acceptable in your design, or you may need to store standardized data in a different place with a link back to the original document.
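As a rough illustration of the zone idea, the sketch below keeps a raw record untouched and writes a standardized copy to a separate cleansed zone with a link back to the original. Everything here is assumed for the example: the in-memory dictionaries stand in for real storage, and the abbreviation table, the `raw_ref` field, and the helper names are hypothetical. Real address standardization is considerably more involved.

```python
# Hypothetical raw zone: records exactly as they arrived.
RAW_ZONE = {
    "doc-001": {"name": "Pat Doe", "address": " 12  main st. ,  springfield "},
}

# A deliberately tiny, illustrative abbreviation table.
ABBREVIATIONS = {"st": "Street", "ave": "Avenue", "rd": "Road"}


def standardize_address(raw):
    """Collapse whitespace, expand a few abbreviations, and capitalize."""
    parts = []
    for part in raw.split(","):
        words = []
        for word in part.split():
            key = word.rstrip(".").lower()
            words.append(ABBREVIATIONS.get(key, key.capitalize()))
        if words:
            parts.append(" ".join(words))
    return ", ".join(parts)


def build_cleansed_zone(raw_zone):
    """Create standardized copies without modifying the raw records."""
    cleansed = {}
    for doc_id, record in raw_zone.items():
        cleansed[doc_id] = {
            "name": record["name"],
            "address": standardize_address(record["address"]),
            "raw_ref": doc_id,  # link back to the original document
        }
    return cleansed


CLEANSED_ZONE = build_cleansed_zone(RAW_ZONE)
print(CLEANSED_ZONE["doc-001"])
```

Keeping the standardized copy separate means the raw document stays available for audits or reprocessing, while analysts work only against the cleansed zone.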

Additional resources:

1. http://timoelliott.com/blog/2014/12/from-data-lakes-to-data-swamps.html

2. http://www.gartner.com/newsroom/id/2809117

3. http://data-informed.com/4-ways-to-avoid-a-data-swamp/

4. http://www.reltio.com/about/news/2016/4/how-to-keep-your-data-lake-from-becoming-a-data-swamp

5. https://www.ibm.com/developerworks/community/blogs/5things/entry/5_things_to_know_about_avoiding_a_data_swap_with_a_data_reservoir?lang=en

6. Zaloni Bedrock – http://www.zaloni.com/products/bedrock/

7. http://gethue.com/

8. http://www.pwc.com/us/en/technology-forecast/2014/cloud-computing/assets/pdf/pwc-technology-forecast-data-lakes.pdf

9. http://blog.zaloni.com/metadata-is-critical-for-fishing-in-the-big-data-lake

10. http://www.infoworld.com/article/2923875/big-data/3-ways-the-data-lake-is-actually-not-helping-with-it-agility.html

11. http://www.infoworld.com/article/2920116/analytics/5-ways-real-time-will-kill-data-quality.html

12. https://www.oreilly.com/ideas/tips-for-managing-metadata-in-a-data-lake